[Keras] Fashion-MNIST CNN Model Code

2020. 4. 28. 11:34 · Notes/Python: Programming

```python
import numpy as np
import keras
from keras.utils import to_categorical
```

Prepare the dataset

```python
# Load Fashion-MNIST: 60,000 training and 10,000 test images, 28x28 grayscale
fm = keras.datasets.fashion_mnist
(trainImage, trainLabel), (testImage, testLabel) = fm.load_data()

# Add a channel axis (28, 28) -> (28, 28, 1) and scale pixels from 0-255 to [0, 1]
xTrain = trainImage.reshape(trainImage.shape[0], 28, 28, 1)
xTrain = xTrain.astype("float64")
xTrain = xTrain / 255

xTest = testImage.reshape(testImage.shape[0], 28, 28, 1).astype("float64") / 255

# One-hot encode the integer labels (0-9) into 10-dimensional vectors
yTrain = to_categorical(trainLabel, 10)
yTest = to_categorical(testLabel, 10)

print(xTrain.shape)
print(yTrain.shape)
print(xTest.shape)
print(yTest.shape)
```

>>>

```
(60000, 28, 28, 1)
(60000, 10)
(10000, 28, 28, 1)
(10000, 10)
```
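The preprocessing above does three things: it adds a trailing channel axis so each image is (28, 28, 1), scales pixel values from 0-255 into [0, 1], and one-hot encodes the integer labels into 10-dimensional vectors. Below is a minimal NumPy-only sketch of the same steps on a tiny synthetic batch. The helper names `preprocess_images` and `one_hot` are hypothetical, and `one_hot` is only meant to show the same 0/1 layout that `to_categorical(labels, 10)` produces, not to replace it.

```python
import numpy as np

def preprocess_images(images):
    """Hypothetical helper: (N, 28, 28) uint8 -> (N, 28, 28, 1) float64 in [0, 1]."""
    x = images.reshape(images.shape[0], 28, 28, 1)  # add the channel axis
    return x.astype("float64") / 255                # scale 0..255 to 0.0..1.0

def one_hot(labels, num_classes=10):
    """Hypothetical helper: integer labels (N,) -> one-hot matrix (N, num_classes)."""
    out = np.zeros((len(labels), num_classes))
    out[np.arange(len(labels)), labels] = 1.0       # exactly one 1 per row
    return out

# Tiny synthetic batch standing in for Fashion-MNIST (not real data).
fake_images = np.full((3, 28, 28), 255, dtype=np.uint8)
fake_labels = np.array([0, 9, 3])

x = preprocess_images(fake_images)
y = one_hot(fake_labels)
print(x.shape, x.max())  # (3, 28, 28, 1) 1.0
print(y[1])              # [0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]
```

Row `i` of the one-hot matrix has its single 1 at column `labels[i]`, which is why the model's last layer needs exactly 10 softmax outputs.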

```python
import matplotlib.pyplot as plt

# Show the first training image (drop the channel axis for imshow)
plt.imshow(xTrain[0, :, :, 0], cmap="Greys")
plt.show()
```

Import the libraries

```python
from keras.models import Sequential
from keras.layers import Conv2D, BatchNormalization, MaxPooling2D, Dropout, Flatten, Dense
```

Build the model

```python
# Constants describing the input shape
IMAGE_WIDTH = 28
IMAGE_HEIGHT = 28
IMAGE_SIZE = (IMAGE_WIDTH, IMAGE_HEIGHT)
IMAGE_CHANNEL = 1

model = Sequential()

# Layer 1: 32 filters of size 2x2
model.add(Conv2D(32, kernel_size=2, activation="relu",
                 input_shape=(IMAGE_HEIGHT, IMAGE_WIDTH, IMAGE_CHANNEL)))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=2))
model.add(Dropout(0.25))

# Layer 2: 64 filters of size 2x2
model.add(Conv2D(64, kernel_size=2, activation="relu"))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=2))
model.add(Dropout(0.25))

# Layer 3: 128 filters of size 2x2
model.add(Conv2D(128, kernel_size=2, activation="relu"))
model.add(BatchNormalization())
model.add(MaxPooling2D(pool_size=2))
model.add(Dropout(0.25))

# Fully connected classifier
model.add(Flatten())
model.add(Dense(512, activation="relu"))
model.add(BatchNormalization())
model.add(Dropout(0.5))
model.add(Dense(10, activation="softmax"))  # one probability per clothing class
```

Configure the model

```python
# Compile options: loss function, optimizer, and metrics to report
model.compile(loss="categorical_crossentropy", optimizer="rmsprop", metrics=["accuracy"])

model.summary()
```

>>>

```
Model: "sequential_10"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
conv2d_18 (Conv2D)           (None, 27, 27, 32)        160
_________________________________________________________________
batch_normalization_20 (Batc (None, 27, 27, 32)        128
_________________________________________________________________
max_pooling2d_17 (MaxPooling (None, 13, 13, 32)        0
_________________________________________________________________
dropout_22 (Dropout)         (None, 13, 13, 32)        0
_________________________________________________________________
conv2d_19 (Conv2D)           (None, 12, 12, 64)        8256
_________________________________________________________________
batch_normalization_21 (Batc (None, 12, 12, 64)        256
_________________________________________________________________
max_pooling2d_18 (MaxPooling (None, 6, 6, 64)          0
_________________________________________________________________
dropout_23 (Dropout)         (None, 6, 6, 64)          0
_________________________________________________________________
conv2d_20 (Conv2D)           (None, 5, 5, 128)         32896
_________________________________________________________________
batch_normalization_22 (Batc (None, 5, 5, 128)         512
_________________________________________________________________
max_pooling2d_19 (MaxPooling (None, 2, 2, 128)         0
_________________________________________________________________
dropout_24 (Dropout)         (None, 2, 2, 128)         0
_________________________________________________________________
flatten_7 (Flatten)          (None, 512)               0
_________________________________________________________________
dense_11 (Dense)             (None, 512)               262656
_________________________________________________________________
batch_normalization_23 (Batc (None, 512)               2048
_________________________________________________________________
dropout_25 (Dropout)         (None, 512)               0
_________________________________________________________________
dense_12 (Dense)             (None, 10)                5130
=================================================================
Total params: 312,042
Trainable params: 310,570
Non-trainable params: 1,472
_________________________________________________________________
```
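The numbers in this summary follow from a few formulas. A `Conv2D` with the default `padding="valid"` and stride 1 shrinks each spatial side from n to n - k + 1; `MaxPooling2D(pool_size=2)` floors n / 2; a convolution has k*k*C_in*C_out weights plus C_out biases; a `Dense` layer has in*out + out; and `BatchNormalization` keeps 4 values per channel (gamma and beta are trained, the moving mean and variance are not). The plain-Python sketch below recomputes the table under those assumptions. It is arithmetic only and does not call Keras; `conv_block` is a hypothetical helper.

```python
def conv_block(n, c_in, c_out, k=2):
    """Hypothetical helper for one Conv2D -> BatchNorm -> MaxPool(2) -> Dropout
    block on an n x n input. Returns (new side, trainable, non-trainable)."""
    n_conv = n - k + 1                           # 'valid' convolution, stride 1
    conv_params = k * k * c_in * c_out + c_out   # kernel weights + biases
    bn_trainable = 2 * c_out                     # gamma and beta
    bn_frozen = 2 * c_out                        # moving mean and variance
    n_pool = n_conv // 2                         # pool_size=2 floors odd sizes
    return n_pool, conv_params + bn_trainable, bn_frozen

side, channels = 28, 1
trainable = frozen = 0
for filters in (32, 64, 128):
    side, t, f = conv_block(side, channels, filters)
    trainable += t
    frozen += f
    channels = filters
    print(f"after {filters}-filter block: {side}x{side}x{channels}")

flat = side * side * channels          # Flatten
trainable += flat * 512 + 512          # Dense(512)
trainable += 2 * 512                   # BatchNormalization(512): gamma, beta
frozen += 2 * 512                      # BatchNormalization(512): mean, variance
trainable += 512 * 10 + 10             # Dense(10, softmax)

print("flatten size:", flat)
print("total:", trainable + frozen, "trainable:", trainable, "non-trainable:", frozen)
# -> total: 312042 trainable: 310570 non-trainable: 1472
```

The odd sizes (27, then 5) are where pooling drops a row and column, which is why the last convolution block ends at 2x2 and `Flatten` produces 2 * 2 * 128 = 512 features.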

Train the model

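```python
from keras.callbacks import EarlyStopping, ReduceLROnPlateau

# EarlyStopping: stop training once val_loss (its default monitor)
# has not improved for 10 consecutive epochs
earlystop = EarlyStopping(patience=10)

# ReduceLROnPlateau: when val_accuracy has not improved for 2 epochs,
# multiply the learning rate by 0.5, but never go below 0.0001.
# It only lowers the learning rate; it does not stop training by itself.
learning_rate_reduction = ReduceLROnPlateau(
    monitor="val_accuracy",
    patience=2,
    factor=0.5,
    min_lr=0.0001,
    verbose=1)

callbacks = [earlystop, learning_rate_reduction]
```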
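To make the interaction of the two callbacks concrete, here is a simplified plain-Python re-implementation of their per-epoch bookkeeping. It is a sketch, not Keras's own code: it assumes the `ReduceLROnPlateau` defaults `min_delta=1e-4` and `cooldown=0`, `EarlyStopping` watching its default `val_loss` with `min_delta=0`, and a starting learning rate of 0.001 (the Keras `RMSprop` default). The function `simulate` is hypothetical, and the flat validation curves are made up for illustration; they are not results from this model.

```python
def simulate(val_acc, val_loss, lr=0.001, factor=0.5, lr_patience=2,
             min_lr=0.0001, min_delta=1e-4, stop_patience=10):
    """Hypothetical, simplified per-epoch logic of
    ReduceLROnPlateau(monitor='val_accuracy') and EarlyStopping(monitor='val_loss').
    Returns (learning rate used in each epoch, epoch where training stops or None)."""
    best_acc, lr_wait = float("-inf"), 0
    best_loss, stop_wait = float("inf"), 0
    lrs = []
    for epoch, (acc, loss) in enumerate(zip(val_acc, val_loss), start=1):
        lrs.append(lr)  # learning rate used during this epoch
        # ReduceLROnPlateau: accuracy must beat the best by more than min_delta
        if acc > best_acc + min_delta:
            best_acc, lr_wait = acc, 0
        else:
            lr_wait += 1
            if lr_wait >= lr_patience and lr > min_lr:
                lr = max(lr * factor, min_lr)  # halve, but never below min_lr
                lr_wait = 0
        # EarlyStopping: stop after stop_patience epochs without a lower val_loss
        if loss < best_loss:
            best_loss, stop_wait = loss, 0
        else:
            stop_wait += 1
            if stop_wait >= stop_patience:
                return lrs, epoch
    return lrs, None

flat_acc = [0.9] * 12   # hypothetical: validation accuracy never improves
flat_loss = [0.3] * 12  # hypothetical: validation loss never improves
lrs, stopped = simulate(flat_acc, flat_loss)
print(stopped)  # 11
print(lrs)
```

With a completely flat validation curve, the learning rate in this sketch halves every two epochs until it reaches `min_lr`, and `EarlyStopping(patience=10)` ends the run after epoch 11 instead of running all 30 epochs.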

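```python
# Train the model. The test set is passed as validation data here,
# so both callbacks react to test-set performance.
history = model.fit(xTrain, yTrain, validation_data=(xTest, yTest),
                    epochs=30, batch_size=200, callbacks=callbacks)
```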

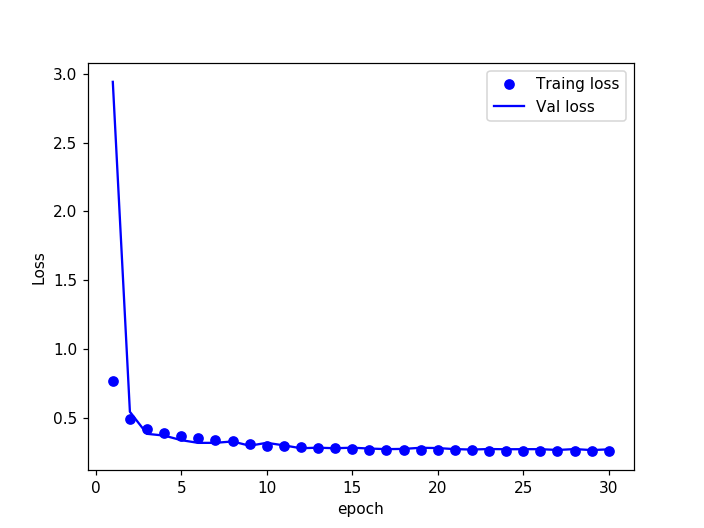

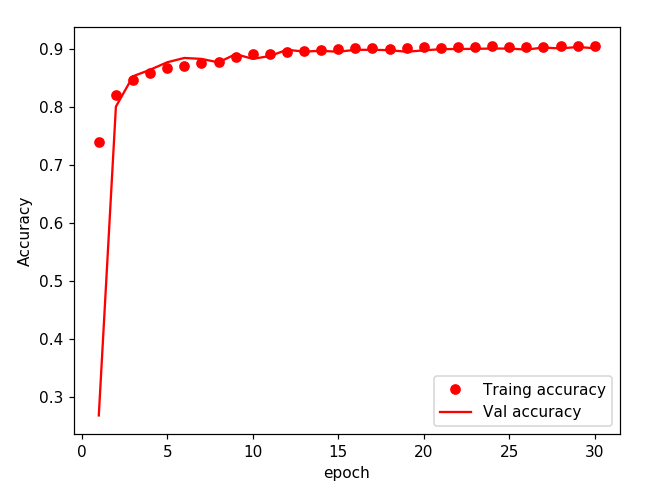

```python
# history.history maps each metric name to a list with one value per epoch
historyDict = history.history
acc = historyDict["accuracy"]
val_acc = historyDict["val_accuracy"]
loss = historyDict["loss"]
val_loss = historyDict["val_loss"]

# Jupyter magic: render interactive figures inside the notebook
%matplotlib notebook

epo = range(1, len(acc) + 1)

# Loss curves
plt.figure()
plt.plot(epo, loss, "bo", label="Training loss")
plt.plot(epo, val_loss, "b", label="Val loss")
plt.xlabel("epoch")
plt.ylabel("Loss")
plt.legend()
plt.show()

# Accuracy curves
plt.figure()
plt.plot(epo, acc, "ro", label="Training accuracy")
plt.plot(epo, val_acc, "r", label="Val accuracy")
plt.xlabel("epoch")
plt.ylabel("Accuracy")
plt.legend()
plt.show()
```
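`history.history` is a plain dict that maps each metric name to a list with one value per completed epoch, so with `EarlyStopping` the lists can be shorter than 30. Besides plotting, the same dict can be summarized numerically. The sketch below uses a hypothetical helper `summarize_history` that picks the epoch with the highest `val_accuracy` (1-based, like `epo` above) and reports the train/validation accuracy gap there. The four-epoch history is invented for illustration and is not this model's output.

```python
def summarize_history(hist):
    """Hypothetical helper: find the epoch (1-based) with the highest
    val_accuracy and report the train/val accuracy gap at that epoch."""
    val_acc = hist["val_accuracy"]
    best = max(range(len(val_acc)), key=val_acc.__getitem__)  # first max wins
    return {
        "best_epoch": best + 1,
        "val_accuracy": val_acc[best],
        "val_loss": hist["val_loss"][best],
        "gap": hist["accuracy"][best] - val_acc[best],  # > 0: train beats val
    }

# Made-up history with the same keys as history.history (not real results).
fake_history = {
    "loss": [0.55, 0.38, 0.33, 0.29],
    "accuracy": [0.80, 0.86, 0.89, 0.90],
    "val_loss": [0.42, 0.36, 0.34, 0.35],
    "val_accuracy": [0.85, 0.87, 0.88, 0.88],
}
print(summarize_history(fake_history))
```

On an actual run you would pass `history.history` instead of `fake_history`. A growing positive gap alongside a rising `val_loss`, as in the last fake epoch, is the usual sign of overfitting that the two plots show visually.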

Evaluate the model

```python
# Evaluate on the test set; score is [loss, accuracy]
score = model.evaluate(xTest, yTest, verbose=0)

print("\n", "Test accuracy:", score[1])
```

>>>

```
Test accuracy: 0.901199996471405
```
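`model.evaluate` returns the loss followed by each compiled metric, so `score[0]` is the categorical cross-entropy and `score[1]` the accuracy; with this loss, Keras resolves the `"accuracy"` metric to categorical accuracy. The plain-Python sketch below shows what those two numbers measure for softmax outputs (rows summing to 1) and one-hot labels. It is a simplified illustration: clipping with an epsilon of 1e-7 mirrors the Keras backend default, the function names are hypothetical, and the three 3-class predictions are invented (the real model has 10 classes).

```python
import math

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over samples of -sum(t * log(p)), with p clipped to [eps, 1 - eps]."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total -= sum(t * math.log(min(max(p, eps), 1 - eps))
                     for t, p in zip(t_row, p_row))
    return total / len(y_true)

def argmax(row):
    """Index of the largest value (the first one on ties)."""
    return max(range(len(row)), key=row.__getitem__)

def categorical_accuracy(y_true, y_pred):
    """Fraction of samples whose highest-probability class matches the label."""
    hits = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)

# Invented one-hot labels and softmax-like predictions (not model output).
y_true = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
y_pred = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.5, 0.3, 0.2]]

score = [categorical_crossentropy(y_true, y_pred), categorical_accuracy(y_true, y_pred)]
print(score[1])  # 0.6666666666666666 (the third sample is misclassified)
```

Only the probability assigned to the true class enters the loss, so the misclassified third sample (0.2 on its true class) contributes most of it, while accuracy just counts argmax matches.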
